Battery Laser-Weld Inspection (Tabs & Sealing Pins)

Ensure welds are correctly placed, repeatable, and free of defects with AI-powered vision inspection

TL;DR (Quick Answer)

Battery laser-weld inspection fails when glare, rotation, and micro-scale tolerances break rule-based vision and manual microscope checks cannot keep pace with the line. Overview.ai's OV80i Vision System addresses this with flexible lenses and lighting, on-camera NVIDIA-powered AI (PyTorch), and browser-based training, so teams can detect weld position and cosmetic issues reliably at production speed.

Situation

In cylindrical and pouch cell manufacturing, tabs are laser-welded to create electrical connections and sealing pins are laser-welded to close electrolyte fill points. After welding, manufacturers must verify weld position (center deviation), weld presence and shape, and cosmetic integrity, all without slowing the line.

The Problem

Real lines are messy:

- Specular metals & heat tint create harsh glare and low, shifting contrast.

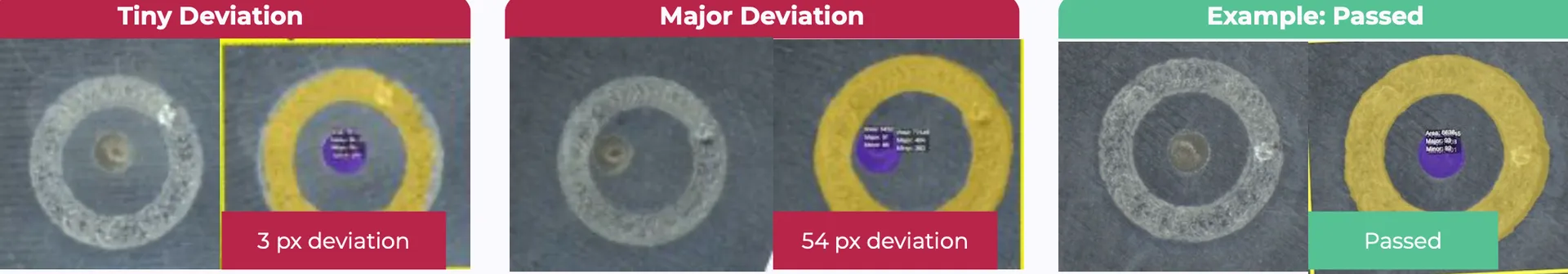

- Micron-level tolerances mean a one-pixel error can flip a decision.

- Pose variation (small rotation/offsets) moves the apparent target and breaks fixed ROIs.

- Manual microscopes are slow and inconsistent across shifts.

- Rule-based tools (static thresholds/edges) swing between escapes and over-rejects as lighting and lot conditions change.

The result: non-deterministic QA, bottlenecks at the weld station, and costly rework.

Why Traditional Systems Miss

- Brittle thresholds struggle with reflective weld buttons, nickel/copper tabs, and discoloration.

- No pose normalization means gauges “wander” when the part rotates.

- Insufficient optics control leaves you under-resolved or fighting depth-of-field/glare.

- Slow iteration cycles (offline toolchains/integrators) discourage continuous improvement.

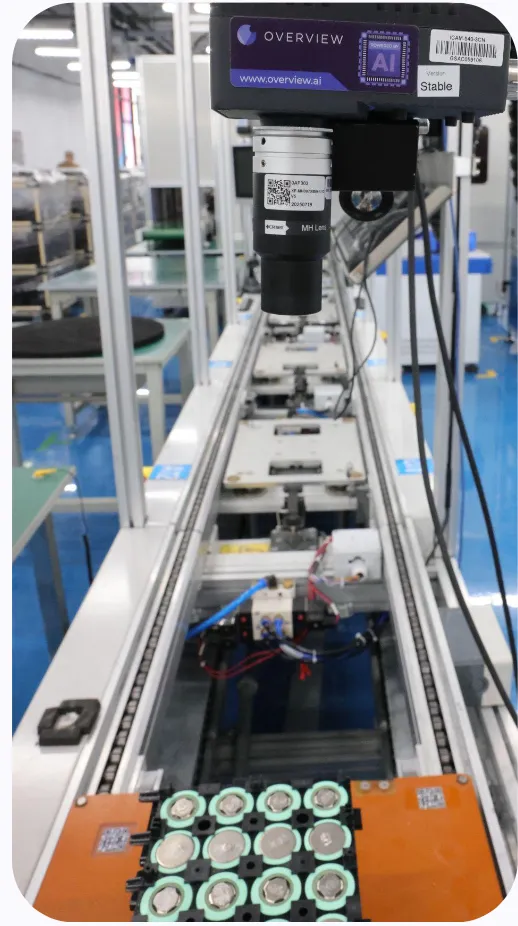

OV80i laser-weld position inspection showing outer reference vs. weld center alignment

Overview.ai Solution

Use the OV80i AI Vision System when battery laser weld inspection depends on small features, stable metrology, and repeatable lighting.

Flexible lenses & lighting

OV80i supports interchangeable lenses and common industrial illuminators (e.g., ring, dome, backlight, on-axis). You can tune field-of-view, working distance, and illumination instead of contorting the station around a fixed camera.

NVIDIA GPU inside + PyTorch

OV80i runs modern deep learning models on-camera; training, labeling, and testing happen in the browser, with no software to install. Your team can quickly adopt new training techniques as they become available.

Segmentation & classification recipes

Choose segmentation when you need geometric measurements (e.g., weld-to-center distance), classification when you need a fast OK/NG decision, or combine both in one inspection.

Fast iteration (“Haystack” workflow)

The software is optimized for rapid model iteration and rollout across additional lines.

Industrial integration

Digital I/O and industrial Ethernet options connect decisions to PLCs and line control; Node-RED enables custom pass/fail logic and tolerance gates.

“I honestly didn't expect this camera could catch deviations so small, but it did. And I didn't expect the setup and training to be this fast and straightforward either. This investment has definitely increased our output quality.”—Head of Production

How It Works (Typical Recipe)

1. Get pixels & contrast right

Select a lens that fills the sensor with the weld region; add ring, dome, or on-axis lighting to suppress hotspots and reveal the bead and reference geometry.
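A quick way to sanity-check a lens choice is to estimate how many pixels span the tolerance you need to hold. The sketch below is illustrative only: the function names are hypothetical, and the field of view, pixel count, and tolerance are made-up example values, not OV80i specifications.

```python
def microns_per_pixel(fov_mm: float, pixels: int) -> float:
    """Object-space size of one pixel along one axis, in micrometres."""
    if fov_mm <= 0 or pixels <= 0:
        raise ValueError("fov_mm and pixels must be positive")
    return fov_mm * 1000.0 / pixels


def pixels_across_tolerance(tolerance_um: float, fov_mm: float, pixels: int) -> float:
    """How many pixels the tolerance band spans at this field of view."""
    return tolerance_um / microns_per_pixel(fov_mm, pixels)


# Hypothetical example: a 10 mm wide field of view imaged onto 2000 pixels,
# with a 50 micrometre position tolerance.
scale = microns_per_pixel(10.0, 2000)
span = pixels_across_tolerance(50.0, 10.0, 2000)
print(f"{scale} um/pixel, tolerance spans {span} pixels")
```

If the tolerance spans only one or two pixels, a single-pixel error can flip the decision, which usually argues for a narrower field of view or a longer focal length.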

2. Normalize pose

Align on stable edges/landmarks so the inspection ROI sits in a consistent coordinate frame even with small rotations.
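One common way to build such a coordinate frame is to anchor it on two landmarks: one defines the origin and the other defines the direction of the x-axis. The sketch below is a generic geometric illustration under that assumption, not the OV80i's internal alignment method; the function name and landmark coordinates are hypothetical.

```python
import math


def to_part_frame(point, origin, axis_point):
    """Express an image point (x, y) in a frame whose origin is `origin`
    and whose +x axis points from `origin` toward `axis_point`."""
    dx = axis_point[0] - origin[0]
    dy = axis_point[1] - origin[1]
    norm = math.hypot(dx, dy)
    if norm == 0:
        raise ValueError("landmarks must be distinct")
    ux, uy = dx / norm, dy / norm          # unit vector along the part x-axis
    px = point[0] - origin[0]
    py = point[1] - origin[1]
    # Project onto the part x-axis and onto its perpendicular (-uy, ux).
    return (px * ux + py * uy, -px * uy + py * ux)


# The same physical feature seen in two poses (second pose rotated 90 degrees).
print(to_part_frame((150, 120), (100, 100), (200, 100)))
print(to_part_frame((80, 150), (100, 100), (100, 200)))
```

Both calls describe the same feature relative to the part, so the part-frame coordinates agree even though the image coordinates differ.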

3. Measure with AI segmentation

Label the features of interest (e.g., outer center feature and weld region). The model outputs masks; the system calculates center-to-center deviation and compares it to tolerance. Add a cosmetic layer to flag burns, pinholes, or spatter-like artifacts if needed.
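As a rough illustration of the measurement step, center-to-center deviation can be computed from the centroids of two binary masks and converted to physical units with a pixel scale. This is a minimal sketch assuming masks arrive as 2-D lists of 0/1 values and that a micrometres-per-pixel calibration is known; the function names and the 5 um/pixel scale are hypothetical, and the OV80i's actual calculation may differ.

```python
import math


def mask_centroid(mask):
    """Centroid (x, y), in pixels, of the nonzero cells of a 2-D mask."""
    count = sum_x = sum_y = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                count += 1
                sum_x += x
                sum_y += y
    if count == 0:
        raise ValueError("mask is empty")
    return (sum_x / count, sum_y / count)


def center_deviation_um(ref_mask, weld_mask, um_per_pixel):
    """Distance between the two mask centroids, in micrometres."""
    rx, ry = mask_centroid(ref_mask)
    wx, wy = mask_centroid(weld_mask)
    return math.hypot(wx - rx, wy - ry) * um_per_pixel


# Toy 5x5 masks: the weld region is shifted one pixel right of the reference.
ref = [[1 if 1 <= x <= 3 and 1 <= y <= 3 else 0 for x in range(5)] for y in range(5)]
weld = [[1 if 2 <= x <= 4 and 1 <= y <= 3 else 0 for x in range(5)] for y in range(5)]
print(center_deviation_um(ref, weld, um_per_pixel=5.0))
```

Because the centroid averages over every pixel in the mask, it is less sensitive to a few mislabeled edge pixels than a single thresholded edge would be.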

4. Decide & act

Pass/Fail and numeric deviation go to the PLC; Node-RED implements the final tolerance rule (e.g., warning band, hard reject). Export CSV for QA trend analysis.
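The tolerance rule itself is simple to express. The sketch below shows a warning band plus a hard-reject limit and a CSV export, written in Python for illustration; Node-RED function nodes are written in JavaScript, so the same logic would need to be ported. The function names, column names, and the 30/50 micrometre thresholds are hypothetical.

```python
import csv
import io


def classify(deviation_um: float, warn_um: float, reject_um: float) -> str:
    """Return PASS, WARN, or FAIL for a measured center deviation."""
    if warn_um > reject_um:
        raise ValueError("warn_um must not exceed reject_um")
    if deviation_um > reject_um:
        return "FAIL"
    if deviation_um > warn_um:
        return "WARN"
    return "PASS"


def results_to_csv(measurements, warn_um: float, reject_um: float) -> str:
    """Render (part_id, deviation_um) pairs as CSV text for trend analysis."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["part_id", "deviation_um", "result"])
    for part_id, deviation_um in measurements:
        writer.writerow([part_id, deviation_um, classify(deviation_um, warn_um, reject_um)])
    return buffer.getvalue()


# Hypothetical limits: warn above 30 um, reject above 50 um.
print(results_to_csv([("A1", 12.5), ("A2", 41.0), ("A3", 63.2)], 30.0, 50.0))
```

A warning band lets the line flag drift toward the limit before parts start failing, which is useful input for the QA trend analysis mentioned above.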

What You Can Expect

- Stable detection despite glare and rotation, because measurement sits on top of aligned, semantic masks—not a single threshold.

- Higher throughput vs. microscope checks; consistent results across shifts/lots.

- Faster iteration during PPAP/ramp and across factories, thanks to in-browser data/recipe updates and on-camera training.

Ready to evaluate? Share a small image set (good/borderline/bad) and we'll return a lens/lighting note, an alignment plan, and a starter segmentation recipe you can validate on your line. → OV80i Vision System

FAQ

What defects does OV80i catch on battery laser welds?

Weld position deviation (center deviation), missing/undersized welds, shape anomalies, and appearance issues that correlate with quality escapes—depending on how you label your segmentation/classification recipe.

How is OV80i different from traditional vision?

It combines interchangeable optics/lighting with in-camera NVIDIA AI and browser-based training, so you can optimize imaging for metals and iterate models fast without external software.

Can we implement custom pass/fail logic?

Yes—teams commonly use Node-RED to encode tolerances (e.g., distance thresholds) and publish Pass/Fail + deviation to PLC/MES.

Ready to Optimize Your Battery Laser Weld Inspection?

Get started with the OV80i AI Vision System for reliable, high-speed battery manufacturing quality control.